Occlusion

When dealing with Pictorial Cues, one must always take care with Occlusion. As Pictorial Cues go, Occlusion isn't very useful; he only turns up whenever something in a person's field of vision obstructs her view of another object. He's a bit limited that way, but don't say it to his face; giving obstructions the power to assert their proximity over occluded targets is all he ever wanted to do with his life.

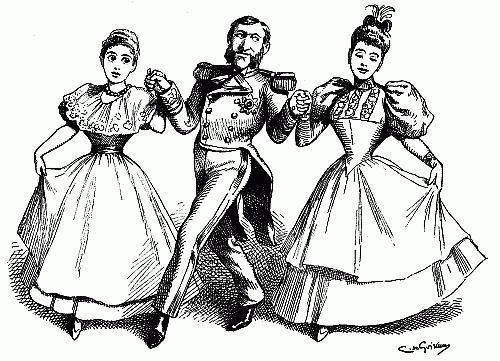

Here are several amusing pictures; Occlusion may not be very helpful, but he sure can work a crowd.

| The cowboy is in front of the woman. |
| The snake is in front of Van Damme's fist. |
| The child is further than the Ewok. |
| The lady is farther afield than the man's hand. |
| The Velociraptor is behind the post. |
| Samuel L. Jackson is behind the drink. |
| Mikki is in closer proximity to the camera, when compared to Allen. |

Relative Height

The cue of relative height means that objects that are below the horizon and have their bases higher in the field of view are usually seen as being more distant. In this picture of the Beatles on Abbey Road, we can see that the Beatles are at the same distance because their bases, their feet, are at the same height. If we look at the white car and the black car, we can see that the black car is a bit farther away because its base, its fender, is higher in the field of view than that of the white car.

Relative Size

The cue of relative size means that when two objects are of equal size, the one that is farther away will take up less of your field of view than the one that is closer. This cue can be seen in the image above. Notice, for example, the number eight ball and the number four ball. We know that the two balls are of equal size, but the number four ball looks smaller and takes up less of our field of view because it is farther away than the number eight ball.
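If you want numbers to go with the billiard balls, here is a minimal sketch of the visual angle an object takes up at a given distance. The function name, the 57 mm ball diameter, and the two distances are our own assumptions for illustration; they are not measured from the picture.

```python
import math

def visual_angle_deg(size_m: float, distance_m: float) -> float:
    """Angle (in degrees) that an object of the given size subtends at the eye."""
    return math.degrees(2 * math.atan(size_m / (2 * distance_m)))

# Assumed numbers: two identical balls, one twice as far away as the other.
BALL_DIAMETER_M = 0.057
eight_ball = visual_angle_deg(BALL_DIAMETER_M, distance_m=1.0)
four_ball = visual_angle_deg(BALL_DIAMETER_M, distance_m=2.0)

print(f"eight ball: {eight_ball:.2f} deg, four ball: {four_ball:.2f} deg")
```

For small objects like these, doubling the distance roughly halves the visual angle, which is why the farther ball looks smaller even though both are the same size.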

Perspective Convergence

In a picture showing depth, two parallel lines that stretch out into the picture appear to come closer and closer together as they extend into the distance. In this picture, for example, the lines on the road are actually parallel, but in conveying the depth and distance in the picture, they look as if they are coming closer together the farther out they extend.
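The convergence falls out of simple pinhole projection, where a point's position in the image is its sideways offset divided by its depth. The sketch below assumes an idealized pinhole camera and a road with lines 3 m apart; `image_x` and `image_gap` are hypothetical helper names, not anything taken from the picture.

```python
def image_x(x_m: float, depth_m: float, focal_length: float = 1.0) -> float:
    """Pinhole projection: horizontal image position of a point at (x, depth)."""
    return focal_length * x_m / depth_m

def image_gap(half_width_m: float, depth_m: float) -> float:
    """Image-plane gap between two lines at x = -half_width and x = +half_width."""
    return image_x(half_width_m, depth_m) - image_x(-half_width_m, depth_m)

# Assumed road: lines 3 m apart (half-width 1.5 m), sampled at growing depths.
for depth in (5, 10, 20, 40, 80):
    print(f"depth {depth:>2} m -> gap in image: {image_gap(1.5, depth):.4f}")
```

The real gap between the lines never changes, but the projected gap shrinks in proportion to 1/depth, so the lines appear to head toward a single point.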

Familiar Size

Using prior knowledge about the size of objects to judge their distance is using familiar size cues. Simply put, under certain conditions, our knowledge of an object’s size influences our perception of its distance. In the example, we see the Eiffel Tower and a person. Based on prior knowledge, we know that the Eiffel Tower is very big, around 320 m tall, and that the average person stands at only about 1.5-2 meters. This suggests that the Eiffel Tower must be very far behind the person to look that small.
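To make the Eiffel Tower reasoning concrete, here is a rough sketch that turns a known size and an apparent (angular) size into a distance. The 30-degree angle and the 1.7 m person are assumed values for illustration only, and `distance_from_known_size` is a hypothetical helper name.

```python
import math

def distance_from_known_size(known_size_m: float, visual_angle_deg: float) -> float:
    """Distance at which an object of known size subtends the given visual angle."""
    half_angle = math.radians(visual_angle_deg) / 2
    return known_size_m / (2 * math.tan(half_angle))

# Assumed photo: the person and the tower both span the same 30-degree angle.
ANGLE_DEG = 30.0
person_distance = distance_from_known_size(1.7, ANGLE_DEG)
tower_distance = distance_from_known_size(320.0, ANGLE_DEG)

print(f"person: {person_distance:.1f} m away, tower: {tower_distance:.1f} m away")
```

When two objects look the same size, their distances are in the same ratio as their real sizes, so the tower has to be a couple of hundred times farther away than the person.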

Atmospheric Perspective

Atmospheric perspective occurs when more distant objects appear less sharp and slightly blurred, often with a faint blue tint. The farther away an object is, the more particles (air, dust, water droplets, etc.) we have to look through to see it. In the following examples, the nearer objects are sharp, with clearer details than the objects farther away.

Texture Gradient

A texture gradient can be used to show depth in a still frame. In this picture, we know that the flowers are actually spaced evenly across the field. But as the field extends into the distance, the flowers appear more tightly packed than the flowers closer to the viewer. This gradient of texture creates an impression of depth.
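The packing effect can be sketched with the same kind of pinhole projection used for any flat ground: a point on the ground lands below the horizon by an amount proportional to eye height divided by depth. The eye height, row spacing, and function names below are assumptions for illustration, not values taken from the flower picture.

```python
def image_drop_below_horizon(depth_m: float, eye_height_m: float = 1.6,
                             focal_length: float = 1.0) -> float:
    """How far below the horizon a ground point at the given depth lands in the image."""
    return focal_length * eye_height_m / depth_m

def row_spacings(first_row_m: float, row_gap_m: float, n_rows: int) -> list[float]:
    """Image-plane spacing between consecutive, evenly spaced rows on the ground."""
    depths = [first_row_m + i * row_gap_m for i in range(n_rows)]
    drops = [image_drop_below_horizon(d) for d in depths]
    return [near - far for near, far in zip(drops, drops[1:])]

# Assumed field: rows of flowers every 1 m, starting 2 m from the viewer.
spacings = row_spacings(first_row_m=2.0, row_gap_m=1.0, n_rows=8)
for i, gap in enumerate(spacings, start=1):
    print(f"rows {i} and {i + 1}: image spacing {gap:.4f}")
```

The rows are equally spaced on the ground, yet each successive gap in the image is smaller than the one before it, which is the gradient the eye reads as depth.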

Shadows

Shadows are created whenever light is occluded by an object. The dimensions of the resulting projection can be worked through with similar triangles (or a little trigonometry) to compute the object's size. This is very useful for measuring tall, well-lit objects like flagpoles; many a high school student has successfully applied the teachings of Math to such a worthy assignment.
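For the flagpole assignment, the usual trick is similar triangles: at the same moment, every upright object has the same ratio of height to shadow length. Here is a minimal sketch under that assumption (flat ground, same time of day); the reference stick and all the measurements are made up for illustration.

```python
def height_from_shadow(shadow_m: float, ref_height_m: float, ref_shadow_m: float) -> float:
    """Similar triangles: height / shadow length is the same for both objects."""
    if ref_shadow_m <= 0:
        raise ValueError("reference shadow length must be positive")
    return shadow_m * ref_height_m / ref_shadow_m

# Assumed measurements: a 1 m stick casts a 1.5 m shadow, the flagpole a 12 m shadow.
flagpole = height_from_shadow(shadow_m=12.0, ref_height_m=1.0, ref_shadow_m=1.5)
print(f"estimated flagpole height: {flagpole:.1f} m")
```

The reference stick stands in for knowing the sun's elevation; if you know that angle instead, the height is the shadow length times its tangent.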

Shadows are also pretty useful when assessing the contours of an observed object. Variations in the size and intensity of cast shadows do a lot for 3D perception.

| Shadows really bring melee combat to life, don't they? |
| Godzilla sure looks big. Thank lighting for the neck-shadow! |
| A lightsaber will help shine the way. |
| Big shadow = big Decepticon. |
| The Shadow: Bringing depth to 2D art since a long time ago, in a galaxy far, far away. |
| Even without familiar objects for comparison, you know these AT-ATs are big. Thank the shadows! |

Motion Parallax

During car rides, people are fond of looking out their windows to enjoy the scenery outside. On one of my night rides, I couldn't help but notice how the different colored lights on the nearby buildings on my side of the road seemed to blur as they moved past me. However, the moon seemed to move at a slower rate; the same can be said for the mountains on the horizon. How is this possible when they seem to lie on the same plane of vision?

Motion Parallax is the key to understanding this perceptual phenomenon. The use of this depth cue in cartoons may provide an explanation.

We came across this interesting short clip of a mouse using a rocket to fly over varied terrain. We can observe how the trees, tall shrubs, or grass (I don’t exactly know what they are) seem to glide rapidly past us compared to the clouds and mountains that make up the distant background. These differences in apparent speed while we are moving come down to what happens on our retinas. As we move, the images of nearby objects, in this case the tall shrubs, seem to move past more rapidly because they travel a greater distance across our retinas in the same amount of time than the images of distant objects such as the moon and the mountains.
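A back-of-the-envelope sketch shows how large the difference is. For a stationary object directly to the side of a moving observer, the angular speed is the observer's speed divided by the object's distance. The car speed and the building and mountain distances below are assumed values; the moon's distance is its average of roughly 384,400 km.

```python
import math

def angular_speed_deg_per_s(observer_speed_m_s: float, distance_m: float) -> float:
    """Angular speed of a stationary object at the instant it is directly to the side."""
    return math.degrees(observer_speed_m_s / distance_m)

CAR_SPEED_M_S = 20.0  # assumed, about 72 km/h

# Assumed distances, except the moon's, which is its approximate average distance.
scene = {
    "roadside building": 10.0,
    "mountain": 10_000.0,
    "moon": 384_400_000.0,
}

for name, distance_m in scene.items():
    speed = angular_speed_deg_per_s(CAR_SPEED_M_S, distance_m)
    print(f"{name}: {speed:.6f} deg/s")
```

The building sweeps across the view a thousand times faster than the mountain, and the moon barely moves at all, which is why it seems to follow the car.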

Many computer games and movies use this depth cue to enhance the perception of movement in their scenes. Motion parallax makes them more entertaining to watch, which in turn improves their commercial value.

To learn more about Motion Parallax, you can visit this site: http://psych.hanover.edu/Krantz/MotionParallax/MotionParallax.html

Deletion and Accretion

Because of academic and other kinds of stress, I closed one eye and played with my hands. I positioned my hands so that my right hand was at arm's length and my left hand was at about half that distance, just to the left of the right hand. As I moved my head sideways to the left and then back again while keeping my hands still, I saw my left hand cover my right hand (deletion) and then uncover it (accretion).